[Teaching home]

ELL880: Special Topics in Computers I (Computational Learning Theory and the Mind)

If you are doing the course, please join the Piazza forum (the access code has been announced in class and e-mailed to you).

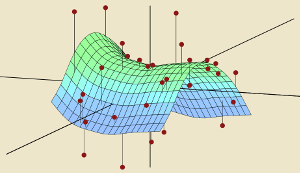

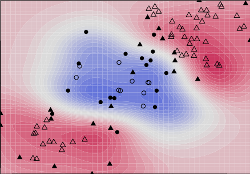

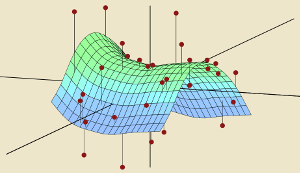

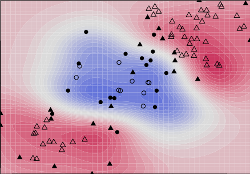

This course is fundamentally about the theory of machine learning: the principles on which the design and analysis of machine learning algorithms are based. There are many aspects to learning theory: the nature of hypothesis spaces, the notion of learnability, the nature of representations which allow for efficient learning, the theory of optimisation of parameters in a machine learning model, the bias-variance trade-off and the theory of regularisation, etc. We will seek to cover the key theoretical ideas which help us to understand the successes (and failures) of machine learning, and also point out the (many) gaps in our current understanding. The theoretical frameworks/notions discussed will include Empirical Risk Minimisation, VC dimension, PAC learning, Rademacher complexity, the Minimum Description Length principle, no-free-lunch theorems, statistical invariants, and some ideas from probability theory, information theory, and algebraic topology. We will also look at how learning theory can have practical utility in terms of error and generalisation bounds on the performance of learning algorithms, which in turn may help us to design better models and also address issues of data sufficiency.

There is, however, an even more exciting prospect for learning theory: it could help us better understand our own minds, and how we acquire knowledge. Historically, some advances in learning theory have been driven by trying to answer questions about what the necessary conditions must be for the mind to be able to learn what it does: for instance, how do we manage to learn the grammatical rules of language, from seemingly a rather limited number of data points? With the contemporary interest in deep learning and its apparent success at replicating human knowledge/concept acquisition in a variety of domains like vision and language, the promise of using learning theory to unravel the workings of the human mind is more potent than ever. However, there is limited work in this direction so far, as many pieces of the theoretical puzzle still are missing or not quite in place. A secondary strand of the course will be to explore some of the attempts that have been made to apply learning-theoretic ideas to human cognition, and discuss future prospects.

Instructor: Sumeet Agarwal

3 credits (3-0-0)

Pre-requisite: Any introductory machine learning course

II Semester 2018–19

Tu F 15:30–16:50, LH 612

Evaluation components

References

- Sanjeev Kulkarni and Gilbert Harman. An Elementary Introduction to Statistical Learning Theory. Wiley, 2011.

- Trevor Hastie, Robert Tibshirani, and Jerome Friedman. The Elements of Statistical Learning: Data Mining, Inference, and Prediction. Springer, 2nd Edition, 2009. [Free online]

- Michael J. Kearns and Umesh V. Vazirani. An Introduction to Computational Learning Theory. MIT, 1994.

- Shai Shalev-Shwartz and Shai Ben-David. Understanding Machine Learning: From Theory to Algorithms. Cambridge, 2014. [Free online]

- Bernhard Schölkopf and Alexander J. Smola. Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond. MIT, 2001.

- Mehryar Mohri, Afshin Rostamizadeh, and Ameet Talwalkar. Foundations of Machine Learning. MIT, 2nd Edition, 2018.

- Tomaso A. Poggio and Fabio Anselmi. Visual Cortex and Deep Networks:

Learning Invariant Representations. MIT, 2016.

- David J. C. MacKay. Information Theory, Inference, and Learning Algorithms. Cambridge, 2003. [Free online, and apparently better than Harry Potter, unless one speaks Welsh or Latin.]

- Leslie Valiant. Probably Approximately Correct: Nature's Algorithms for Learning and Prospering in a Complex World. Basic Books, 2013.

Planned schedule

| Serial no. |

Lecture hours |

Topics |

| 1 |

1–2 |

Introduction; the Statistical Learning Framework |

| 2 |

3–6 |

Empirical Risk Minimisation and Inductive Bias |

| 3 |

7–9 |

Probably Approximately Correct (PAC) Learning |

| 4 |

10–12 |

Learning via Uniform Convergence |

| 5 |

13–18 |

The No-Free-Lunch Theorem and the Bias-Complexity Trade-off |

| 6 |

18–20 |

Another notion of Learnability: Gold's Language identification in the limit and its implications for human language acquisition |

| 7 |

20–21 |

Philosophical reflections: The Problem of Induction |

| 8 |

21–27 |

The VC-Dimension and the Fundamental Theorem of Statistical Learning |

| 9 |

27–32 |

Nonuniform Learnability: Structural Risk Minimisation (SRM) and the Minimum Description Length principle |

| 10 |

33–35 |

Model Selection using SRM; Model Validation |

| 11 |

35–36 |

Student presentations |

| 12 |

37–40 |

The Kernel Trick and Learning with Kernels |

| 13 |

40–42 |

Neural Networks and Deep Learning: Expressive Power, Sample Complexity; Parallels between Deep Learning and the Visual Cortex |

[Image credits: towardsdatascience.com; github.io; rossintelligence.com.]

[Teaching home]